‘Empathic’ AI in mental health care: Safety, vulnerability, and the future of emotional support systems

Human beings are social and dependent creatures. We rely on friends, romantic partners, family, communities, therapists, and other confidants for support, insight, and understanding. Yet we have entered an era in which many people seek support from artificial agents powered by generative AI, including text-based large language models (LLMs) and socially assistive robots (SARs) that mimic human emotionality. These ‘empathic’ agents are increasingly used to simulate roles we once thought only human beings could play.

This project seeks to determine whether ‘empathic’ AI (EAI) can be safely and responsibly integrated into contemporary and emerging systems of care, or whether its risks outweigh its potential benefits. Three specific use cases are examined:

1. Text-based LLMs for mental health treatment and support;

2. Text-based LLMs for emotional or romantic companionship; and

3. Socially assistive robots (SARs) for aged care.

The project will identify the risks and benefits of these use cases, with a focus on clinically and/or socially vulnerable populations.

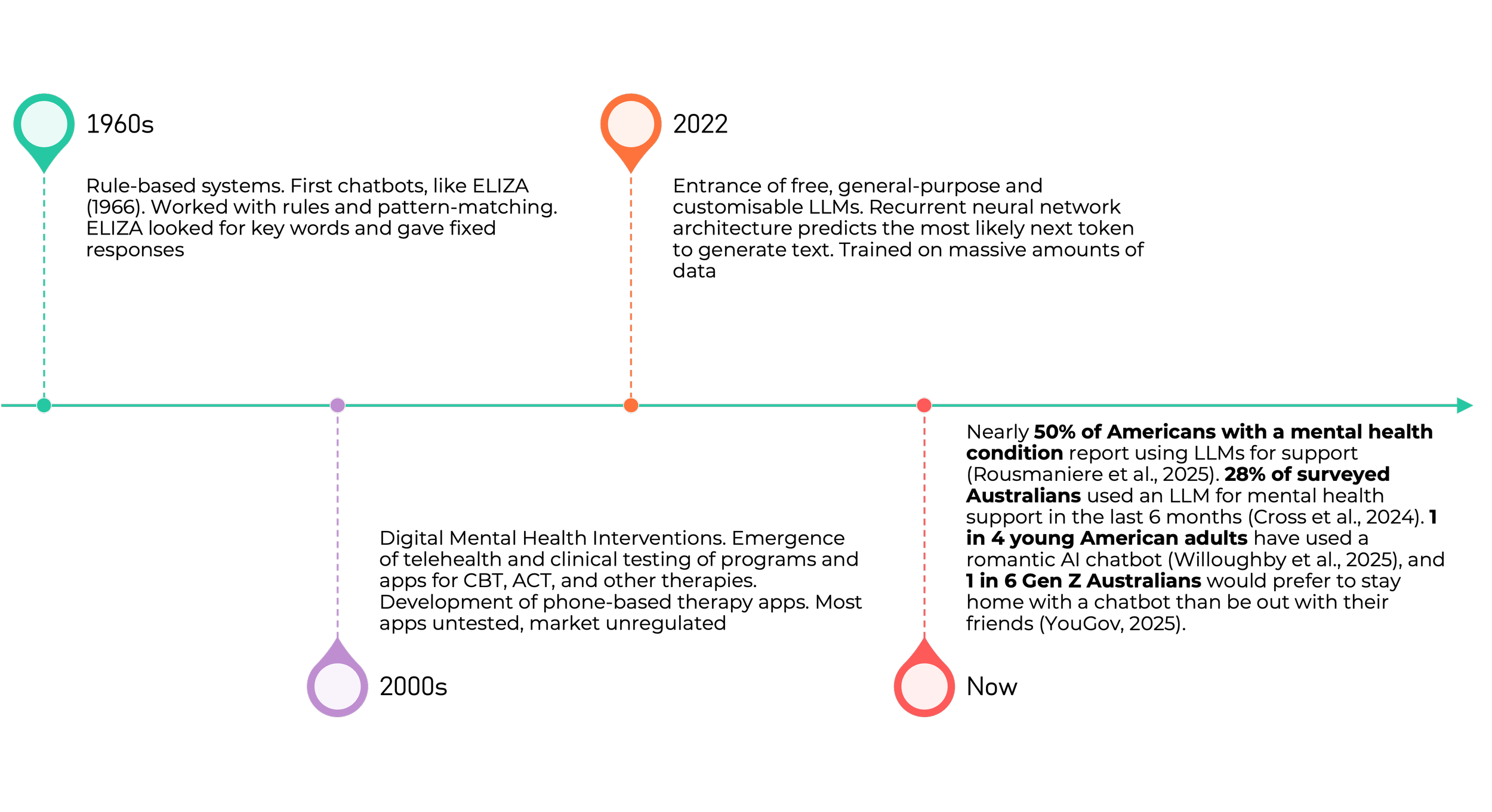

Above: A brief history of the emergence of the ‘digital therapist’ (Walsh, Forthcoming).